
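To build our AI article generator, we will use Ollama for LLM interaction, LangChain for workflow management, LangGraph to define the workflow nodes, and the LangChain Community library for additional integrations. For web search, we will use duckduckgo-search.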

1. Environment Setup

First, install and configure these tools.

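To use Ollama, install the Ollama application on your system and then download the Llama 3.2 model.

- Download the installer from the official Ollama website.

- Run the installer and follow the on-screen instructions to complete the setup. Ollama supports macOS and Windows (Linux is supported through an install script).

- After installation, you can work with models from a terminal:

  - Open a terminal.

  - If the `ollama` command is not on your PATH, navigate to the directory where Ollama is installed.

  - List the models already downloaded to your machine: `ollama list`

  - Download a model, then run it: `ollama pull <model_name>` and `ollama run <model_name>` (for this tutorial, the model name is `llama3.2`).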
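Before moving on, you may want to confirm from Python that the Ollama server is running and that `llama3.2` has been pulled. The sketch below is a minimal, stdlib-only check. It assumes Ollama's default local address `http://localhost:11434` and its `GET /api/tags` endpoint, which lists downloaded models; the helper names `parse_model_names`, `has_model`, and `list_local_models` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default host and port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def has_model(names: list[str], wanted: str) -> bool:
    """True if `wanted` (e.g. 'llama3.2') matches a local tag such as 'llama3.2:latest'."""
    return any(name == wanted or name.split(":", 1)[0] == wanted for name in names)


def list_local_models(url: str = OLLAMA_TAGS_URL, timeout: float = 5.0) -> list[str]:
    """Query a running Ollama server; raises urllib.error.URLError if it is unreachable."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

For example, `has_model(list_local_models(), "llama3.2")` should return `True` once the model has been pulled. If the server is not running (it starts with the desktop app, or with `ollama serve`), `list_local_models()` raises an error instead.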

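Install the remaining libraries with pip. The `!` prefix runs each command from a Jupyter notebook cell; drop it when installing from a terminal.

```
!pip install langchain==0.2.12
!pip install langgraph==0.2.2
!pip install langchain-ollama==0.1.1
!pip install langsmith==0.1.98
!pip install langchain_community==0.2.11
!pip install duckduckgo-search==6.2.13
```

2. Code Implementation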

First, import the required libraries:

```python
# Displaying the final output
from IPython.display import display, Markdown, Latex

# LangChain dependencies
from langchain.prompts import PromptTemplate
from langchain_core.output_parsers import JsonOutputParser, StrOutputParser
from langchain_community.chat_models import ChatOllama
from langchain_community.tools import DuckDuckGoSearchRun
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper
from langgraph.graph import END, StateGraph

# For the state graph
from typing_extensions import TypedDict

import os
```

Define the LLM to use:

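```python
# Defining the LLMs
local_llm = 'llama3.2'

# Plain-text model used to generate the post
llama3 = ChatOllama(model=local_llm, temperature=0)

# JSON-mode model used by the router and query-transformation chains below
llama3_json = ChatOllama(model=local_llm, format='json', temperature=0)
```

Define the web search tool: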

```python
# Web search tool
wrapper = DuckDuckGoSearchAPIWrapper(max_results=25)
web_search_tool = DuckDuckGoSearchRun(api_wrapper=wrapper)
```

You can test the tool as follows:

```python
# Test run
resp = web_search_tool.invoke("current Weather in New York")
print(resp)
```

Sample output:

```
East wind around 8 mph. Partly sunny, with a high near 69. Northeast wind around 8 mph. Mostly cloudy, with a low around 59. East wind 3 to 6 mph. Mostly sunny, with a high near 73. Light and variable wind becoming south 5 to 7 mph in the afternoon. Mostly clear, with a low around 60
```

Define the response-generation prompt for the LLM:

```python
generate_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an AI assistant that synthesizes web search results to create engaging and informative LinkedIn posts that are clear, concise, and appealing to professionals on LinkedIn.
Make sure the tone is professional yet approachable, and include actionable insights, tips, or thought-provoking points that would resonate with the LinkedIn audience.
If relevant, include a call-to-action or a question to encourage engagement. Strictly use the following pieces of web search context to answer the question.
If you don't know, just say that you don't know. Only make direct references to material if provided in the context.
<|eot_id|>
<|start_header_id|>user<|end_header_id|>
Question: {question}
Web Search Context: {context}
Answer:
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>""",
    input_variables=["question", "context"],
)

# Chain
generate_chain = generate_prompt | llama3 | StrOutputParser()

# Test run
question = "Nobel prize 2024"
context = ""
generation = generate_chain.invoke({"context": context, "question": question})
print(generation)
```

This code sets up a prompt template and chains it with the LLM (`llama3`) to generate responses. Here is what each part does:

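`PromptTemplate` defines the structure of the LLM's input.

Template structure:

- The system prompt instructs the AI to synthesize web search results into a professional, informative, and engaging LinkedIn post.

- The AI should build its response from the web search context and say so explicitly when the information is insufficient.

- The template takes two inputs, `{question}` and `{context}`, which are inserted dynamically to produce the request the LLM processes.

Chain configuration (`generate_chain`):

- `generate_chain` is built by piping `generate_prompt` into the model (`llama3`) and a string output parser (`StrOutputParser`).

- The string output parser ensures the final response is returned as plain text.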
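To see what the model actually receives, the following stdlib-only sketch mimics how `PromptTemplate` fills `{question}` and `{context}` with its default `f-string` template format, which uses Python `str.format`-style substitution. The shortened template and the `render` helper are hypothetical illustrations, not LangChain's implementation.

```python
# Shortened, hypothetical version of generate_prompt's template.
TEMPLATE = """<|begin_of_text|><|start_header_id|>system<|end_header_id|>
Strictly use the following web search context to answer the question.
<|eot_id|><|start_header_id|>user<|end_header_id|>
Question: {question}
Web Search Context: {context}
Answer:
<|eot_id|><|start_header_id|>assistant<|end_header_id|>"""

# Mirrors input_variables=["question", "context"]
INPUT_VARIABLES = ("question", "context")


def render(template: str, **values: str) -> str:
    """Fill the named placeholders, failing loudly if an input variable is missing."""
    missing = [name for name in INPUT_VARIABLES if name not in values]
    if missing:
        raise KeyError(f"missing input variables: {missing}")
    return template.format(**values)


prompt = render(TEMPLATE, question="Nobel prize 2024", context="")
print(prompt)
```

One consequence of `str.format`-style substitution is that a literal brace in a template, such as an example JSON object, has to be written as `{{` and `}}`.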

Define the routing prompt:

```python
# Router
router_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an expert at routing a user question to either the generation stage or web search.
Use the web search for questions that require more context for a better answer or recent events.
Otherwise, you can skip and go straight to the generation phase to respond.
You do not need to be stringent with the keywords in the question related to these topics.
Give a binary choice 'web_search' or 'generate' based on the question.
Return the JSON with a single key 'choice' with no preamble or explanation.
Question to route: {question}
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>
""",
    input_variables=["question"],
)

# Chain
question_router = router_prompt | llama3_json | JsonOutputParser()

# Test run
question = "What's up?"
print(question_router.invoke({"question": question}))
```

Router prompt template (`router_prompt`):

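- This section creates a prompt template with the `PromptTemplate` class. The template is formatted text that is filled in with the specific question at run time.

- It tells the assistant when to use web search (for recent events or context-heavy questions) and when to generate a response directly (for general or context-independent queries).

- It requires a binary choice, `web_search` or `generate`, returned as JSON.

Chain configuration (`question_router`):

- `question_router` feeds `router_prompt` into the `llama3_json` model and `JsonOutputParser`.

- The pipeline takes a question, processes it with the specified LLM (here, Llama 3.2 in JSON mode), and parses the result into JSON with a single key, `choice`, whose value is `web_search` or `generate`.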
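The router answers with `{"choice": "web_search"}`, while the graph's conditional entry point expects the route key `"websearch"` (no underscore). The hypothetical helper below, which is not part of the original code, sketches that mapping on the dictionary returned by `question_router.invoke(...)` and falls back to a default route when a small local model returns a missing or unexpected `choice`.

```python
# Router answer -> route key used in the conditional entry point's mapping
ROUTES = {"web_search": "websearch", "generate": "generate"}


def choose_route(output: object, default: str = "generate") -> str:
    """Map the parsed router output to a route key, falling back to `default`."""
    choice = output.get("choice") if isinstance(output, dict) else None
    if not isinstance(choice, str):
        return default  # missing or non-string 'choice'
    # Tolerate stray whitespace or capitalization from the model
    return ROUTES.get(choice.strip().lower(), default)


print(choose_route({"choice": "web_search"}))
```

Usage would look like `choose_route(question_router.invoke({"question": question}))`. Falling back to `"generate"` is a design choice: the post is still produced, just without fresh search context.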

Define the web search query transformation prompt:

```python
# Query transformation
query_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an expert at crafting web search queries for research questions.
More often than not, a user will ask a basic question that they wish to learn more about; however, it might not be in the best format.
Reword their query to be the most effective web search string possible.
Return the JSON with a single key 'query' with no preamble or explanation.
Question to transform: {question}
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>
""",
    input_variables=["question"],
)

# Chain
query_chain = query_prompt | llama3_json | JsonOutputParser()

# Test run
question = "What's happened recently with Gaza?"
print(query_chain.invoke({"question": question}))
```

Query transformation prompt (`query_prompt`):

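- This prompt is designed to optimize web search queries.

- It turns a casual or basic user question into an effective web search string that retrieves more relevant information.

- It instructs the LLM to rephrase the question in a format better suited to search engines.

- The response is returned as JSON with a single key, `query`, and no other text.

Chain configuration (`query_chain`):

- `query_chain` combines `query_prompt` with the language model (`llama3_json`) and `JsonOutputParser`.

- This setup lets the prompt transform the query and return the output as JSON.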
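Later, `transform_query` reads `gen_query["query"]` directly, which raises a `KeyError` if the model omits the key. As a defensive alternative, the hypothetical `extract_query` helper below parses the model's raw text reply with the standard library (assuming the chain ends in `StrOutputParser()` instead of `JsonOutputParser()`), strips a surrounding markdown code fence if one is present, and falls back to the user's original question when no usable query comes back.

```python
import json
import re

# Matches a whole reply wrapped in a markdown code fence, optionally tagged "json".
FENCE = re.compile(r"^`{3}(?:json)?\s*(.*?)\s*`{3}$", re.DOTALL)


def extract_query(raw: str, question: str) -> str:
    """Return the 'query' value from the reply, or the original question on failure."""
    text = raw.strip()
    match = FENCE.match(text)
    if match:
        text = match.group(1)  # keep only the fenced body
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return question  # reply was not JSON
    query = data.get("query") if isinstance(data, dict) else None
    if isinstance(query, str) and query.strip():
        return query.strip()
    return question  # missing or empty 'query'
```

Falling back to the untransformed question keeps the web search step working even when the rewrite fails, at the cost of a less targeted search.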

Defining a modular research-agent workflow with graph state

```python
# Graph state
class GraphState(TypedDict):
    """
    Represents the state of our graph.

    Attributes:
        question: question
        generation: LLM generation
        search_query: revised question for web search
        context: web_search result
    """
    question: str
    generation: str
    search_query: str
    context: str


# Node: Generate
def generate(state):
    """
    Generate answer

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): New key added to state, generation, that contains LLM generation
    """
    print("Step: Generating Final Response")
    question = state["question"]
    # "context" is absent when the router skipped web search
    context = state.get("context", " ")

    # Answer generation
    generation = generate_chain.invoke({"context": context, "question": question})
    return {"generation": generation}


# Node: Query Transformation
def transform_query(state):
    """
    Transform user question to web search

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Appended search query
    """
    print("Step: Optimizing Query for Web Search")
    question = state['question']
    gen_query = query_chain.invoke({"question": question})
    search_query = gen_query["query"]
    return {"search_query": search_query}


# Node: Web Search
def web_search(state):
    """
    Web search based on the question

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Appended web results to context
    """
    search_query = state['search_query']
    print(f'Step: Searching the Web for: "{search_query}"')

    # Web search tool call
    search_result = web_search_tool.invoke(search_query)
    return {"context": search_result}


# Conditional edge: routing
def route_question(state):
    """
    Route question to web search or generation.

    Args:
        state (dict): The current graph state

    Returns:
        str: Next node to call
    """
    print("Step: Routing Query")
    question = state['question']
    output = question_router.invoke({"question": question})

    if output.get('choice') == "web_search":
        print("Step: Routing Query to Web Search")
        return "websearch"

    # 'generate' (or any unexpected answer) goes straight to generation,
    # so the entry point always receives a valid route key instead of None
    print("Step: Routing Query to Generation")
    return "generate"
```

`GraphState` class:

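`GraphState` is a `TypedDict` that defines the structure of the state dictionary shared across the graph workflow. It has four keys:

- question: the original user question.

- generation: the final answer generated by the LLM.

- search_query: the question optimized for web search.

- context: the web search results used to generate the response.

Generate node (`generate`):

- Generates the final response with the LLM from the question and the context (if any).

- Writes the `generation` key to the graph state.

- Prints `Step: Generating Final Response` to show its progress.

Query transformation node (`transform_query`):

- Optimizes the user's question for web search.

- Calls `query_chain` and writes the `search_query` key to the state.

- Prints `Step: Optimizing Query for Web Search`.

Web search node (`web_search`):

- Runs a web search with the optimized query (`search_query`).

- Uses the web search tool (`web_search_tool.invoke`) and writes the `context` key to the state.

- Prints the query in the message `Step: Searching the Web for: "<search_query>"`.

Conditional routing (`route_question`):

- Routes the question to web search or generation based on the decision made by `question_router`.

- Prints `Step: Routing Query`.

- If the choice is `web_search`, it returns the `"websearch"` route, which the graph maps to the `transform_query` node (followed by the web search), and prints `Step: Routing Query to Web Search`.

- If the choice is `generate`, it returns the `"generate"` route and prints `Step: Routing Query to Generation`.

Together, these snippets define the core nodes and decision logic of the modular research-agent workflow. Each function is one step: it reads the shared state and returns the keys it updates, while the router sends the question through the appropriate nodes. The final output is a structured response generated either from web search context (when available) or directly by the LLM.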
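None of the nodes returns the full state; each returns only the key it produces, and the graph merges that partial update into the shared state. The framework-free simulation below illustrates this with hypothetical stub nodes in place of the LLM and search calls. It assumes LangGraph's default behaviour for keys without a reducer, which is to overwrite the previous value, and it is not LangGraph's actual implementation.

```python
from typing import Callable, TypedDict


class GraphState(TypedDict, total=False):
    question: str
    generation: str
    search_query: str
    context: str


def transform_query(state: GraphState) -> GraphState:
    # Stub for query_chain: pretend the LLM rewrote the question
    return {"search_query": state["question"].rstrip("?") + " latest news"}


def web_search(state: GraphState) -> GraphState:
    # Stub for web_search_tool.invoke
    return {"context": f"results for: {state['search_query']}"}


def generate(state: GraphState) -> GraphState:
    # "context" is missing when web search was skipped, so read it with a default
    context = state.get("context", "")
    return {"generation": f"Post about {state['question']!r} using {len(context)} chars of context"}


def run(state: GraphState, nodes: list[Callable[[GraphState], GraphState]]) -> GraphState:
    for node in nodes:
        update = node(state)         # partial update: only the keys this node produced
        state = {**state, **update}  # overwrite-style merge, like a key without a reducer
    return state


with_search = run({"question": "What is LangGraph?"}, [transform_query, web_search, generate])
direct = run({"question": "What's up?"}, [generate])
print(with_search)
print(direct)
```

The second run shows why `generate` has to tolerate a missing `context` key: when the router skips web search, no node ever writes it.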

Building a state-based workflow with StateGraph

```python
# Build the nodes
workflow = StateGraph(GraphState)
workflow.add_node("websearch", web_search)
workflow.add_node("transform_query", transform_query)
workflow.add_node("generate", generate)

# Build the edges
workflow.set_conditional_entry_point(
    route_question,
    {
        "websearch": "transform_query",
        "generate": "generate",
    },
)
workflow.add_edge("transform_query", "websearch")
workflow.add_edge("websearch", "generate")
workflow.add_edge("generate", END)

# Compile the workflow
local_agent = workflow.compile()
```

Creating the `StateGraph` instance (`workflow`):

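- `StateGraph` represents the research agent's workflow.

- The workflow is initialized with the `GraphState` type, so every node works with the defined state structure.

Adding nodes to the graph:

The following nodes are added to the workflow:

- "websearch": runs the `web_search` function to perform the web search.

- "transform_query": runs the `transform_query` function to optimize the user's question for web search.

- "generate": runs the `generate` function to produce the final response with the LLM.

Setting the conditional entry point (`workflow.set_conditional_entry_point`):

- The `route_question` function defines the workflow's entry point.

- Based on its decision, the question is routed to one of two nodes:

  - The "websearch" route maps to the `transform_query` node: used when the query needs more context from the web.

  - The "generate" route maps to the `generate` node: used when no web search is needed.

Defining the workflow's edges:

- Edges define the flow between nodes:

  - "transform_query" -> "websearch": after the query is transformed, run the web search.

  - "websearch" -> "generate": after the web search, pass the results to the generation node.

  - "generate" -> END: after the final response is generated, the workflow ends.

Compiling the workflow:

- `workflow.compile()` creates a compiled agent (`local_agent`) that can be invoked to process queries according to the defined workflow.

- `local_agent` then executes the nodes and routes each query automatically according to that logic.

This code builds a state-based workflow with `StateGraph`, with nodes for query transformation, web search, and response generation. The workflow moves between these nodes according to the routing decision and the fixed edges, so questions that do not need fresh information skip the search steps entirely.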
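The compiled graph's control flow is small enough to trace by hand. The sketch below reproduces it without LangGraph: one dictionary stands in for the conditional entry point's mapping, another for the fixed edges, and a loop follows edges until it reaches END. Using the string `"__end__"` mirrors the value of `langgraph.graph.END`; everything else is an illustrative assumption rather than how `workflow.compile()` works internally.

```python
END = "__end__"  # same string as langgraph.graph.END

# Route key returned by route_question -> first node to run
ENTRY_MAP = {"websearch": "transform_query", "generate": "generate"}

# Fixed edges added with workflow.add_edge(...)
EDGES = {"transform_query": "websearch", "websearch": "generate", "generate": END}


def trace(route_key: str) -> list[str]:
    """Return the node names visited for a given routing decision."""
    node = ENTRY_MAP[route_key]
    visited: list[str] = []
    while node != END:
        visited.append(node)
        node = EDGES[node]
    return visited


for key in ENTRY_MAP:
    print(f"{key!r}: {' -> '.join(trace(key))} -> END")
```

Both paths end at `generate`, which is why that node is the only one that writes the `generation` key read by `run_agent`.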

Define a function to run the agent:

```python
def run_agent(query):
    output = local_agent.invoke({"question": query})
    print("=======")
    display(Markdown(output["generation"]))
```

3. Testing the Agent

Example 1:

```python
run_agent("latest news on Claude LLM ")
```

```
Step: Routing Query
Step: Routing Query to Web Search
Step: Optimizing Query for Web Search
Step: Searching the Web for: "Claude LLM latest news."
Step: Generating Final Response
=======
```

Breaking Down the Latest Advancements in AI: What You Need to Know About Claude 3.5 Sonnet

Exciting news for AI enthusiasts! Anthropic, a leading research company focused on AI safety and alignment, has just released its latest model, Claude 3.5 Sonnet. This significant advancement in language model technology is designed to excel in coding tasks and set new benchmarks in the field.

What sets Claude 3.5 Sonnet apart?

This new model outperforms its predecessor, Claude 3 Opus, on various intelligence benchmarks. It also introduces Artifacts, a collaboration tool for teams to work with AI models like Claude 3.5 Sonnet. This feature is particularly exciting, as it enables seamless integration of AI into workflows.

A game-changer in computer interaction

What's truly remarkable about Claude 3.5 Sonnet is its ability to interact with computers like humans do. Using a new "Computer Use" API, this model can follow user commands to move a cursor around the screen, click on relevant locations, and input information via a virtual keyboard. This skill is currently in public beta, but it has the potential to revolutionize how we work with AI.

Key takeaways

Claude 3.5 Sonnet is a significant advancement in language model technology

It outperforms other models like OpenAI's GPT-4 and Google Gemini on various benchmarks

The model introduces Artifacts, a collaboration tool for teams to work with AI

Claude 3.5 Sonnet can interact with computers like humans do, using a new "Computer Use" API

What does this mean for you?

As AI continues to evolve, it's essential to stay informed about the latest developments. With Claude 3.5 Sonnet, we're seeing significant advancements in AI safety and alignment. This model has the potential to transform the way we work with AI, making it more accessible and user-friendly.

Stay ahead of the curve

Want to learn more about Claude 3.5 Sonnet and its capabilities? Check out Anthropic's research papers and models on their website. You can also explore how to access Claude 2.1 over API or chat with it at claude.ai.

Let's continue to push the boundaries of AI innovation! What do you think about the latest advancements in AI? Share your thoughts in the comments below!

Follow us for more updates on AI and technology!

[Your Company/Brand Name]

[Social Media Handles]

Example 2:

```python
run_agent("Latest news this week on AI?")
```

```
Step: Routing Query
Step: Routing Query to Web Search
Step: Optimizing Query for Web Search
Step: Searching the Web for: "latest news ai this week"
Step: Generating Final Response
=======
```

The Latest Buzz in AI: Exciting Developments and Challenges

As we dive into the latest news on AI, it's clear that this field is rapidly evolving and making significant strides. From generative AI video to natural voice interactions, the possibilities are endless.

Three startups have recently released new video models, each with unique strengths: Haiper's Haiper 2.0, Genmo's Mochi 1, and Meta's latest AR glasses. These advancements are not only impressive but also highlight the growing importance of AI in our daily lives.

However, as we celebrate these breakthroughs, it's essential to acknowledge the challenges that come with AI development. As Kyle Wiggers and Devin Coldewey pointed out, AI ethics is often falling by the wayside. It's crucial that we prioritize responsible AI development and ensure that its benefits are accessible to all.

On a more positive note, OpenAI has made significant strides in its latest model, GPT-4o, which promises to make ChatGPT smarter and easier to use. This update is a testament to the power of innovation and collaboration between tech giants like OpenAI and Microsoft.

The intersection of AI and journalism is also worth noting. The Financial Times has partnered with OpenAI to integrate its reliable news sources into ChatGPT, aiming to enhance the AI's accuracy and trustworthiness. This collaboration highlights the potential for AI to improve the way we consume information.

As we move forward in this rapidly evolving landscape, it's essential that we prioritize responsible AI development and consider the impact on society. What are your thoughts on the future of AI? How do you think we can balance innovation with ethics?

Let's continue the conversation! Share your thoughts in the comments below.

#AI #Innovation #Ethics #Collaboration #FutureOfWork

4. Building a Streamlit Application

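Setting up the environment

Before running the Streamlit app, make sure all required libraries are installed.

Open a terminal and install Streamlit:

```
pip install streamlit
```

If you have a requirements.txt file, you can install the dependencies with:

```
pip install -r requirements.txt
```

Creating the Python script

Create a new file named streamlit_app.py (or any other name you prefer) and paste the complete Streamlit code below into it.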

```python
# LangChain dependencies
from langchain.prompts import PromptTemplate
from langchain_core.output_parsers import JsonOutputParser, StrOutputParser
from langchain_community.chat_models import ChatOllama
from langchain_community.tools import DuckDuckGoSearchRun
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper
from langgraph.graph import END, StateGraph

# For the state graph
from typing_extensions import TypedDict

import streamlit as st


# Defining the LLMs
def configure_llm():
    st.sidebar.header("Configure LLM")

    # Model selection (every option must already be pulled with Ollama)
    model_options = ["llama3.2", "llama3.1"]
    selected_model = st.sidebar.selectbox("Choose the LLM Model", options=model_options, index=0)

    # Temperature setting
    temperature = st.sidebar.slider("Set the Temperature", min_value=0.0, max_value=1.0, value=0.5, step=0.1)

    # Create LLM instances based on the user's selection
    llama_model = ChatOllama(model=selected_model, temperature=temperature)
    llama_model_json = ChatOllama(model=selected_model, format='json', temperature=temperature)
    return llama_model, llama_model_json


# Streamlit application interface
st.title("Personal LinkedIn post Generator powered By Llama3.2")
llama3, llama3_json = configure_llm()

wrapper = DuckDuckGoSearchAPIWrapper(max_results=25)
web_search_tool = DuckDuckGoSearchRun(api_wrapper=wrapper)

generate_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an AI assistant that synthesizes web search results to create an engaging and informative LinkedIn post that is clear, concise, and appealing to professionals on LinkedIn.
Make sure the tone is professional yet approachable, and include actionable insights, tips, or thought-provoking points that would resonate with the LinkedIn audience.
If relevant, include a call-to-action or a question to encourage engagement. Strictly use the following pieces of web search context to answer the question.
If you don't know, just say that you don't know. Only make direct references to material if provided in the context.
<|eot_id|>
<|start_header_id|>user<|end_header_id|>
Question: {question}
Web Search Context: {context}
Answer:
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>""",
    input_variables=["question", "context"],
)

# Chain
generate_chain = generate_prompt | llama3 | StrOutputParser()

# # Test run
# question = "who is Yan Lecun?"
# context = ""
# generation = generate_chain.invoke({"context": context, "question": question})
# print(generation)

router_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an expert at routing a user question to either the generation stage or web search.
Use the web search for questions that require more context for a better answer, or recent events.
Otherwise, you can skip and go straight to the generation phase to respond.
You do not need to be stringent with the keywords in the question related to these topics.
Give a binary choice 'web_search' or 'generate' based on the question.
Return the JSON with a single key 'choice' with no preamble or explanation.
Question to route: {question}
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>
""",
    input_variables=["question"],
)

# Chain
question_router = router_prompt | llama3_json | JsonOutputParser()

query_prompt = PromptTemplate(
    template="""
<|begin_of_text|>
<|start_header_id|>system<|end_header_id|>
You are an expert at crafting web search queries for research questions.
More often than not, a user will ask a basic question that they wish to learn more about; however, it might not be in the best format.
Reword their query to be the most effective web search string possible.
Return the JSON with a single key 'query' with no preamble or explanation.
Question to transform: {question}
<|eot_id|>
<|start_header_id|>assistant<|end_header_id|>
""",
    input_variables=["question"],
)

# Chain
query_chain = query_prompt | llama3_json | JsonOutputParser()


# Graph state
class GraphState(TypedDict):
    """
    Represents the state of our graph.

    Attributes:
        question: question
        generation: LLM generation
        search_query: revised question for web search
        context: web_search result
    """
    question: str
    generation: str
    search_query: str
    context: str


# Node: Generate
def generate(state):
    """Generate the answer; adds the `generation` key to the state."""
    print("Step: Generating Final Response")
    question = state["question"]
    # "context" is absent when the router skipped web search
    context = state.get("context", " ")

    # Answer generation
    generation = generate_chain.invoke({"context": context, "question": question})
    return {"generation": generation}


# Node: Query Transformation
def transform_query(state):
    """Transform the user question into a web search query; adds `search_query`."""
    print("Step: Optimizing Query for Web Search")
    question = state['question']
    gen_query = query_chain.invoke({"question": question})
    search_query = gen_query["query"]
    return {"search_query": search_query}


# Node: Web Search
def web_search(state):
    """Run a web search for `search_query`; adds the results as `context`."""
    search_query = state['search_query']
    print(f'Step: Searching the Web for: "{search_query}"')

    # Web search tool call
    search_result = web_search_tool.invoke(search_query)
    return {"context": search_result}


# Conditional edge: routing
def route_question(state):
    """Route the question to web search or generation; returns the next route key."""
    print("Step: Routing Query")
    question = state['question']
    output = question_router.invoke({"question": question})

    if output.get('choice') == "web_search":
        print("Step: Routing Query to Web Search")
        return "websearch"

    # 'generate' (or any unexpected answer) goes straight to generation
    print("Step: Routing Query to Generation")
    return "generate"


# Build the nodes
workflow = StateGraph(GraphState)
workflow.add_node("websearch", web_search)
workflow.add_node("transform_query", transform_query)
workflow.add_node("generate", generate)

# Build the edges
workflow.set_conditional_entry_point(
    route_question,
    {
        "websearch": "transform_query",
        "generate": "generate",
    },
)
workflow.add_edge("transform_query", "websearch")
workflow.add_edge("websearch", "generate")
workflow.add_edge("generate", END)

# Compile the workflow
local_agent = workflow.compile()


def run_agent(query):
    output = local_agent.invoke({"question": query})
    print("=======")
    return output["generation"]


user_query = st.text_input("Enter your post topic or question:", "")

if st.button("Generate post"):
    if user_query:
        st.write(run_agent(user_query))
```

Saving the code

Save the script in your working directory with a clear name, for example streamlit_app.py.

Running the Streamlit app

Open a terminal in the same directory where you saved streamlit_app.py.

Run the Streamlit app with the following command:

```
streamlit run streamlit_app.py
```

Opening the Streamlit interface

一旦应用程序开始运行,默认网络浏览器中将打开一个新选项卡,显示 Streamlit 界面。

或者,您可以手动打开浏览器并转到终端中显示的地址,通常如下所示:

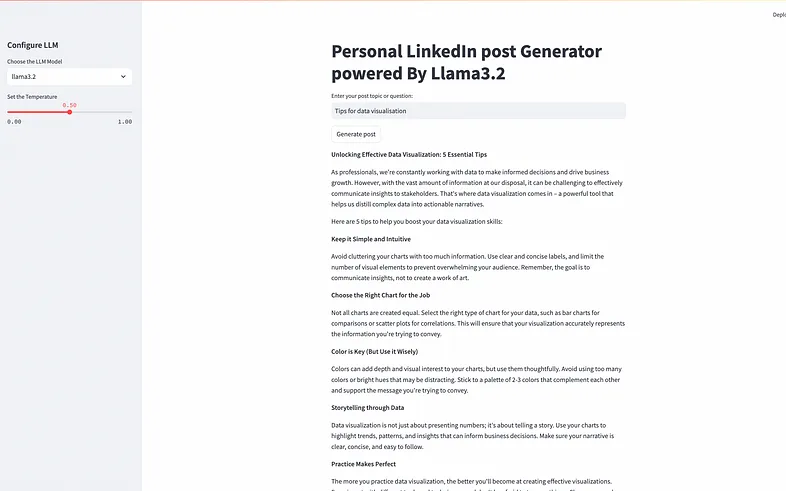

http://localhost:8501/配置 LLM 模型

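- Use the sidebar options to configure the LLM:

  - Choose a model (remember that it must first be downloaded to your system with Ollama, for example `ollama pull llama3.2`).

  - Set the temperature with the slider.

Entering your query

In the main interface you will see a text input box labeled "Enter your post topic or question:". Type your question there.

Running the query

Click the "Generate post" button. The app processes your query through the configured workflow and displays the result.

Viewing the output

The final answer is displayed automatically.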

Translated and compiled by BimAnt; please credit the source when reposting.